The European Union’s Medical Device Regulation (MDR) 2017/745 has significantly transformed the regulatory affairs landscape for medical device manufacturers by establishing strict requirements to ensure the safety and efficacy of devices before they reach the European market. These regulations demand detailed technical documentation, comprehensive clinical evaluations, and proactive post-market surveillance, creating substantial resource and time challenges, particularly for small and medium-sized enterprises (SMEs).

As of 2024, the regulatory field is increasingly exploring how technologies such as machine learning (ML) and artificial intelligence (AI) can help meet the demands of an evolving regulatory landscape more efficiently. Large Language Models (LLMs) like ChatGPT are emerging as valuable tools in regulatory processes. These tools can assist with tasks ranging from technical document creation to risk assessment and usability testing. With recent advancements in prompt engineering and AI training, ChatGPT can help regulatory teams streamline processes, improve compliance, and reduce human resource demands.

Medical Device Regulation and Regulatory Challenges

Three years after the Medical Device Regulation (MDR) took effect, device manufacturers and other stakeholders still encounter a number of difficulties, especially concerning CE marking, which is required for devices to be marketed within the EU. The MDR requires complete technical documentation, risk assessment, and post-market monitoring to demonstrate device compliance, which places a heavy financial and operational strain on manufacturers, especially small and medium-sized enterprises (SMEs). To meet these extensive demands, a number of organizations are exploring whether AI can lessen the human resource burden and improve process efficiency.

Artificial Intelligence and Regulatory Affairs

Artificial Intelligence (AI), specifically LLMs like ChatGPT, offers promising applications in regulatory affairs, assisting in document drafting, risk assessment, usability verification, and data collection. The following are some key areas where AI can enhance regulatory tasks:

- Technical Documentation: Drafting accurate technical documents requires time and expertise. ChatGPT can assist in preparing technical reports, safety and performance checklists, and risk management plans.

- Risk Analysis: By identifying potential risks and suggesting mitigation strategies, ChatGPT can support risk analysis, potentially saving time and resources.

- Clinical Evaluation: ChatGPT can summarize scientific literature, organize clinical findings, and help develop clinical evaluation reports, an essential part of the CE marking process.

- Usability Testing: AI can generate prompts for usability tests, directing attention to critical aspects of device operation, packaging, and labeling that affect user comprehension and safety.

- Post-Market Surveillance: ChatGPT can assist in designing and interpreting surveys that capture data on device efficacy and safety from users in real-world conditions.

Artificial Intelligence Performance Assessment in Regulatory Affairs

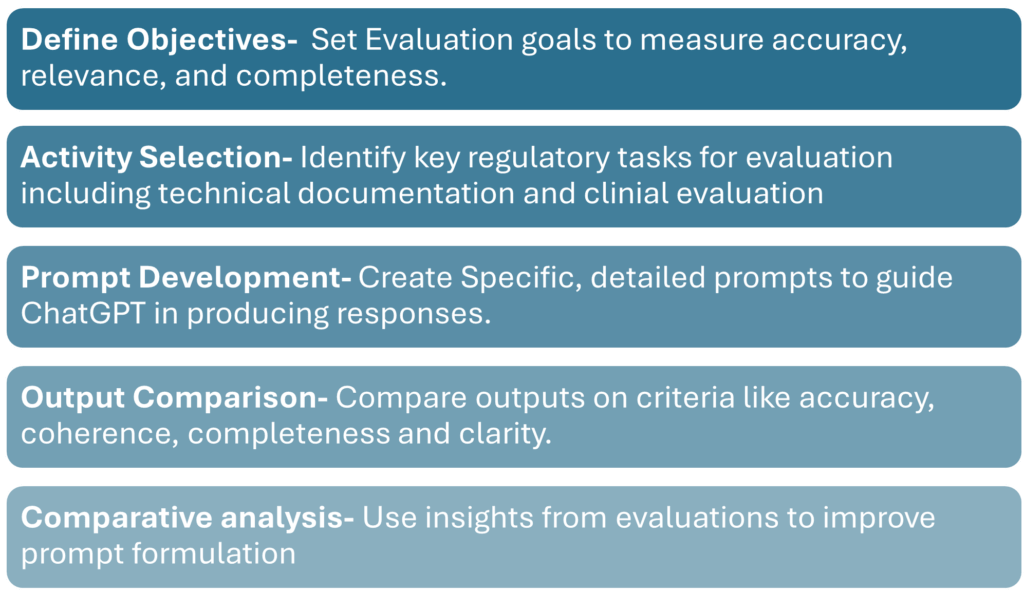

To assess ChatGPT’s effectiveness, a research article by Fabio di Bello describes tests that compared AI-generated outputs with reports written by industry experts. The following methodology provided insights into LLM performance and reliability:

This structured evaluation revealed that, while ChatGPT could support some regulatory activities, it required further refinement in tasks demanding high specificity or nuanced judgment.

To illustrate these capabilities, here are some selected case studies from the research article “Enhancing Regulatory Affairs in the Market Placement of New Medical Devices: How LLMs Like ChatGPT May Support and Simplify Processes,” which offer real-world examples of LLMs aiding regulatory workflows and improving compliance strategies.

Case Study 1: AI-Assisted Survey for Post-Market Surveillance

A case study was conducted where ChatGPT generated a survey to assess the safety and efficacy of a gynecological cream. The AI-generated survey covered aspects of clinical symptoms and user feedback but fell short in providing a quantitative assessment framework essential for robust statistical analysis. This limitation underscored the importance of enhancing prompts with specific, quantitative parameters for reliable output.
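One way to add quantitative parameters to such a prompt is to state the rating scale and the outcomes to be measured explicitly. The sketch below is illustrative only: the function name `build_survey_prompt`, the 1-to-5 scale, and the listed outcomes are assumptions made for this example and are not taken from the research article.

```python
def build_survey_prompt(product, outcomes, scale_min=1, scale_max=5):
    """Assemble a survey-generation prompt that asks for numeric rating scales."""
    if scale_min >= scale_max:
        raise ValueError("scale_min must be smaller than scale_max")
    lines = [
        f"Draft a post-market surveillance survey for: {product}.",
        f"Rate every item on a numeric scale from {scale_min} to {scale_max}.",
        "Label both ends of the scale and include one question per outcome:",
    ]
    # One bullet per outcome so the model produces one scored item for each.
    lines += [f"- {outcome}" for outcome in outcomes]
    lines.append("Do not use free-text-only questions for these outcomes.")
    return "\n".join(lines)


# Hypothetical outcomes chosen purely for illustration.
prompt = build_survey_prompt(
    "a gynecological cream",
    ["symptom relief", "ease of application", "adverse reactions"],
)
print(prompt)
```

Responses collected on a fixed numeric scale can then be aggregated statistically, which free-text answers alone do not allow.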

Case Study 2: Usability Testing for Medical Devices

In a separate usability test on breast implants, ChatGPT was prompted to create a questionnaire for users to evaluate the ease of device operation and the clarity of instructions. While initial outputs were generic, follow-up prompts focusing on step-by-step instructions improved specificity, though human refinement was needed to align the AI-generated questionnaire with regulatory expectations.

Token Management and Optimization Techniques

One challenge in using LLMs is the model’s token limit, which can restrict the length and completeness of responses. Working within this limit requires prompt engineering that optimizes token usage by focusing on conciseness, relevance, and clarity. Techniques such as iterative responses, segmentation of prompts, and a focus on key topics can help maximize output quality within token constraints.
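Prompt segmentation can be sketched as follows. This is a minimal example under stated assumptions: tokens are approximated by counting whitespace-separated words, which differs from the tokenizer any real model uses, and the function names and sample paragraphs are hypothetical.

```python
def estimate_tokens(text):
    """Rough token estimate: whitespace-separated word count (an assumption)."""
    return len(text.split())


def segment_paragraphs(paragraphs, max_tokens):
    """Greedily pack paragraphs into segments of at most max_tokens each.

    A single paragraph larger than max_tokens is kept whole in its own
    segment, so it would still need manual shortening.
    """
    segments, current, used = [], [], 0
    for paragraph in paragraphs:
        cost = estimate_tokens(paragraph)
        # Start a new segment when adding this paragraph would exceed the budget.
        if current and used + cost > max_tokens:
            segments.append("\n\n".join(current))
            current, used = [], 0
        current.append(paragraph)
        used += cost
    if current:
        segments.append("\n\n".join(current))
    return segments


# Hypothetical document sections used only to demonstrate the packing.
paragraphs = [
    "Device description and intended purpose.",
    "Risk management summary covering identified hazards.",
    "Clinical evaluation summary.",
]
segments = segment_paragraphs(paragraphs, max_tokens=10)
for index, segment in enumerate(segments, start=1):
    print(f"Segment {index}: {estimate_tokens(segment)} estimated tokens")
```

Each segment can then be submitted as a separate prompt, with the responses reviewed and combined afterwards. In practice the word-count estimate would be replaced by the tokenizer of the model in use.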

Challenges Associated with Large Language Models (LLMs) in Medicine

Privacy Risks: LLMs are trained on vast datasets, including web-scraped data, which may contain personal or health information. In the European Union, the General Data Protection Regulation (GDPR) limits access to personal health data, prompting investigations into models like ChatGPT for compliance. The United States faces similar challenges under HIPAA, especially when protected health information is included in prompts submitted to LLMs, which raises concerns about compliance with data protection laws worldwide.

Device Regulation: Whether an LLM qualifies as a medical device depends on its intended use. General-purpose LLMs may not be classified as medical devices, while those designed for medical applications may face strict regulatory scrutiny, especially when they learn adaptively.

Intellectual Property Issues: The lack of clarity around intellectual property rights in LLM development can restrain innovation. Overly strict IP protections might limit market entry, while limited protections could discourage investment due to uncertain exclusivity.

Cybersecurity and Liability: While LLMs could improve cybersecurity by detecting vulnerabilities, they also pose risks for manipulation and misinformation. Regulatory agencies must establish cybersecurity standards and clarify liability to mitigate risks of misuse and fraud.

Adapting LLMs in Regulatory Affairs

AI’s integration into regulatory processes requires continuous model evolution and user adaptability. Effective LLM use depends on evolving model capabilities, access to updated regulatory information, and improved algorithms. As AI technologies advance, regular training and adaptation to new developments will be essential to maintaining performance standards.

Conclusion

The potential of LLMs in regulatory affairs is evident in their ability to streamline documentation, support clinical evaluation, and manage post-market surveillance for medical devices. However, these tools require a strategic approach that combines prompt engineering, user training, and a robust understanding of AI limitations. By optimizing token usage and refining prompts, regulatory professionals can harness AI to enhance operational efficiency, reduce human resource demands, and improve compliance with MDR standards.

Future Directions

Ongoing advancements in LLM technology, access to real-time data, and enhanced regulatory algorithms will shape AI’s role in regulatory affairs. With the potential for continuous improvement in AI capabilities, stakeholders in the medical device industry can look forward to AI becoming a reliable partner in regulatory compliance and efficiency.
